Unreal Engine isn't ready for AI

Hackweek at Sentry this year was exactly as you’d expect: LLMs everywhere. In practice that looks like a lot of folks trying to build things in spaces they’re less familiar with. For my co-founder and me, it continued our tradition of attempting to build games, this time with Unreal Engine. I wanted to see how much I could rely on an LLM to push the boundaries of my skills, as I had already seen how effective this strategy was when working on an iOS app recently.

This is going to be a story of the four-day journey of absolutely flailing.

# Day 1: Design

Whenever I start a new project now, especially in a technology that I haven’t worked with before, I focus a lot on coming up with pseudo specs. Usually this amounts to overarching design documents on how I want to approach product functionality or what the user experience should function like. I’ve also found it’s a great time to build technical implementation specs, dialing in design patterns, and effectively building up a set of docs that I can rely on for the rest of the project.

I wire up a few things (my current running experiments) to try to make this more effective. The first being a prompt injection that tries to coerce the LLM into relying on the docs more frequently:

    // .claude/settings.json
    {
      "permissions": {
        "allow": [],
        "deny": []
      },
      "hooks": {
        "UserPromptSubmit": [
          {
            "matcher": "*",
            "hooks": [
              {
                "type": "command",
                "command": "echo 'MANDATORY: If something is unclear, you MUST ask me. ALWAYS reference our docs when they are available for a task, and make a note when they are not.'"
              }
            ]
          }
        ]
      }
    }

This works to some degree, but you’re still generally reliant on the context window in front of you. With this in place I then begin to define my spec. Usually this is a few paragraphs. This time around I started off in ChatGPT with GPT-5 Thinking. I found it slow, and not as familiar or reliable as I was used to (in previous projects I had better luck with o3). So I took a summary and moved it into Claude Code - after all, I want these docs co-located with my project:

We’re building a game called System Breach. It’s based on the Bug Tracer board game from Sentry. This game is inspired by Phasmophobia, and is a 4-player co-op, scenario-based game, where players must identify and hunt a “bug” in the space station’s systems.

A dark, atmospheric space station with flickering lights, humming servers, and claustrophobic corridors. The bug isn’t just hiding—it’s actively corrupting systems, creating an escalating sense of dread.

After a bit of back and forth, the first set of artifacts came out to around 3,000 lines of documentation. This is never perfect, and one of the difficult tasks when working with agents is maintaining an appropriate amount of accurate documentation.

The initial overview (docs/game-design-overview.md) shows pretty well what we’re after:

# System Breach — Game Design Overview

## Elevator Pitch

System Breach is a first-person, co-op horror-investigation game where 1–4 players ("devs") hunt an invisible software bug destabilizing a space station. Like Phasmophobia, tension comes from gathering evidence under time pressure and escalating threats—but here the adversary has no body. It exists only through systemic effects: lights stutter, doors desync, networks hiccup, cooling reverses, comms distort. The board game Bug Tracer supplies worldbuilding (zone names, flavor, factions), not mechanics.

## Design Pillars

1. **Systemic horror, not creature horror**: Fear arises from corrupted systems, not a visible monster.

2. **Deduction under pressure**: Collect and validate evidence to identify the bug's archetype before it takes over.

3. **Readable escalation**: A visible Control meter drives Incident windows (30/60/85%), telegraphing danger and forcing choices.

4. **Asymmetric PvE (AI-only)**: A Director-style AI runs the bug—plotting node attacks, planting decoys, and responding to player behavior.

5. **World first**: Use Bug Tracer's canonical zone names and tone to ground maps and narrative dressing.

## Core Documentation

### Foundation

- **@terminology.md** - All game terms and definitions

- **@core-loop.md** - The 5-phase gameplay progression

- **@win-conditions.md** - Victory and failure states

### Systems

- **@evidence-systems.md** - Investigation mechanics and evidence classes

- **@ai-behavior-design.md** - Bug AI patterns and Director system

### Content

- **@scenarios/scenario-template.md** - Template for creating new scenarios

- **@zones.md** - Bug Tracer canonical zone names and descriptions

## Quick Reference

### Key Metrics

- **Control**: Bug's system takeover (0-100%)

- **Confidence**: Team's diagnostic certainty (0-100%)

- **Noise**: False positive contamination (caps Confidence)

### Escalation Thresholds

- **30% Control**: Minor Incident

- **60% Control**: Major Incident

- **85% Control**: Critical Incident

- **100% Control**: System Takeover (loss)

### Player Tools

- Investigation: Scanner, Analyzer, Thermal, Mic

- Defense: Firewall, Honeypot, Debug Console

### Victory Path

> **See @win-conditions.md for complete details**

Evidence → 90% Confidence → Weakness Protocol → Craft Trap → Deploy → Hold → Win

## Core Loop

> **See @core-loop.md for detailed phase breakdown**

Five phases: Deploy → Stabilize → Deduce → Commit → Execute

## Core Systems

- **@control-system.md** - Pressure and escalation (0-100%)

- **@confidence-system.md** - Investigation progress

- **@evidence-systems.md** - Four evidence classes

- **@incidents.md** - Escalation events at 30/60/85%

- **@node-system.md** - Infrastructure and corruption

- **@ai-behavior-design.md** - Director AI system

- **@bug-archetypes.md** - Enemy behavioral patterns

- **@player-tools.md** - Equipment and abilities

## What Comes From Bug Tracer (And What Doesn't)

**Comes from Bug Tracer**: Zone names, factions, terminal VO tone, glyphs, flavor text, lore artifacts (e.g., memory dumps, intel crates).

**Doesn't**: Core mechanics (evidence math, tools, AI behavior, win gates) are bespoke to System Breach.

## Content Structure

> **See @scenarios/scenario-template.md for full template**

Each scenario includes:

- Active zones from Bug Tracer canon

- Bug archetype with signature tells

- Node configuration (Major/Minor/Core)

- Evidence distribution tables

- Control pacing and Incident triggers

- Exploit Trap crafting requirements

## Constraints & Non-Goals

- **No "bug player"**: The adversary is AI-only and never visible.

- **No engine/tech decisions** in this doc; pure design.

- **Scoring is not a focus** right now; success/failure states and readability are.

Over the next couple of hours I refined the spec: asking it questions and then prompting it to update docs to clarify what I wanted vs what it originally had written. Here’s the output of that first session leading up to lunch:

    ➜ ~/s/S/docs (cfa82fd) ✔ tree
    .
    ├── ai-behavior-design.md
    ├── bug-archetypes.md
    ├── confidence-system.md
    ├── control-system.md
    ├── core-loop.md
    ├── doc-design.md
    ├── evidence-systems.md
    ├── game-design-overview.md
    ├── incidents.md
    ├── node-system.md
    ├── one-pager-game-overview.md
    ├── player-tools.md
    ├── scenarios
    │   └── scenario-template.md
    ├── terminology.md
    ├── win-conditions.md
    └── zones.md

I spent another 5 hours modularizing the docs and further refining them, until I felt like they had enough clarity that an LLM could use them for verification. To contrast where we were at the lunch break with where we ended up at the end of the first day’s game design session, here’s the modularized tree:

    .
    ├── archive
    │   ├── ai-behavior-design.md
    │   ├── bug-archetypes.md
    │   ├── confidence-system.md
    │   ├── control-system.md
    │   ├── incidents.md
    │   ├── investigation-systems.md
    │   ├── node-system.md
    │   └── one-pager-game-overview.md
    ├── balance-evaluation.md
    ├── bugs
    │   ├── daemon.md
    │   ├── index.md
    │   ├── phantom.md
    │   ├── poltergeist.md
    │   └── wraith.md
    ├── core-loop.md
    ├── evidence-systems.md
    ├── game-design-overview.md
    ├── gameplay-summary.md
    ├── incidents
    │   ├── auth-failure.md
    │   ├── firewall-panic.md
    │   ├── gravity-glitch.md
    │   ├── incident-types.md
    │   ├── index.md
    │   ├── lockdown-protocol.md
    │   ├── memory-overflow.md
    │   ├── network-storm.md
    │   ├── power-cascade.md
    │   ├── thermal-runaway.md
    │   └── ventilation-fault.md
    ├── meta
    │   ├── bug-design-constraints.md
    │   ├── documentation-guide.md
    │   └── mystery-philosophy.md
    ├── patch-system.md
    ├── player-tools.md
    ├── programs
    │   ├── corruption-scanner.md
    │   ├── index.md
    │   ├── log-analyzer.md
    │   ├── memory-profiler.md
    │   ├── network-sniffer.md
    │   ├── process-monitor.md
    │   ├── program-concepts.md
    │   ├── signal-tracer.md
    │   ├── stability-checker.md
    │   └── thermal-monitor.md
    ├── progression-system.md
    ├── scenarios
    │   └── scenario-template.md
    ├── terminals.md
    ├── terminology.md
    ├── win-conditions.md
    └── zones.md

Suffice to say, it was a lot of words. 36,730 to be exact. Not all of this ended up being correct, but it was a great start.

That evening I spent a few hours trying to get Unreal up and running with Claude Code. So far I was just reading and writing markdown files - wildly different than the behavior I need to be successful here.

    ➜ ~/s/S/docs (273266f) ✗ tree implementation
    implementation
    ├── blueprint-testing-guide.md
    ├── common-pitfalls.md
    ├── design-decisions.md
    ├── gamestate-architecture.md
    ├── gamestate-troubleshooting.md
    ├── index.md
    ├── testing-gamestate.md
    ├── ue5-patterns.md
    └── windows-development.md

I started building up a toolchain using Claude Code to enable a typical agent workflow (evaluate conditions => write code => validate code). I knew I was going to be limited given how much of game dev is done in the editor, and how much is visual, but I had hoped I could build a lot of the systems in C++.

It helped me wire up a ue-dev.py Python script to improve cross-platform builds (e.g. the different paths/commands you need). If you’re not familiar, Unreal Engine provides a mechanism to patch compiled code without restarting the editor (called “Live Coding”), which seems to be the primary mechanism for C++ development. Little did I know this whole system was going to be my downfall as I wrapped up the first evening…
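To give a sense of what a script like that has to paper over, here is a minimal sketch of per-platform build command selection. It is not the actual ue-dev.py: the function name, the `SystemBreachEditor` target, and the engine and project paths are hypothetical, and it assumes a stock engine layout where the build wrapper scripts live under `Engine/Build/BatchFiles`.

```python
import platform
from pathlib import Path

# Build wrapper script (relative to the engine root) and Unreal platform name
# for each host OS. Assumes a stock engine install layout.
BUILD_SCRIPTS = {
    "Windows": ("Engine/Build/BatchFiles/Build.bat", "Win64"),
    "Darwin": ("Engine/Build/BatchFiles/Mac/Build.sh", "Mac"),
    "Linux": ("Engine/Build/BatchFiles/Linux/Build.sh", "Linux"),
}


def build_command(engine_root, project_file, target, system=None,
                  configuration="Development"):
    """Return the argv list that builds `target` on the given host OS.

    `system` defaults to the current host as reported by platform.system().
    """
    system = system or platform.system()
    if system not in BUILD_SCRIPTS:
        raise ValueError(f"unsupported platform: {system}")
    script, ue_platform = BUILD_SCRIPTS[system]
    return [
        str(Path(engine_root) / script),
        target,                      # hypothetical target, e.g. "SystemBreachEditor"
        ue_platform,
        configuration,
        f"-Project={project_file}",
        "-WaitMutex",                # wait for any other running build to finish
    ]
```

The returned list can be handed to `subprocess.run(..., check=True)`, which gives an agent a single command to call regardless of which machine it is running on.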

# Day 2-3: Nothing Works

The first day I was primarily working from the office on my Mac Studio. That evening, I decided to switch to my Windows machine for the remaining three days, thinking it would be more effective. I’ve got a dual monitor setup (which, it turns out, is critical for working in UE) as well as huge amounts of memory and a high-end GPU. Unfortunately the OS transition posed more problems than I was anticipating…

The first issue? Claude Code doesn’t run on Windows. I typically work in WSL (tl;dr a Linux VM in Windows) and didn’t even consider this as an issue. My workaround was to network mount the WSL partition so I could load up the project in UE, build it on Windows, but keep working in Linux. That didn’t work at all as UE doesn’t seem to even function if you network mount the source code.

That led me to spending a few hours that morning getting Cursor up and running in a native Windows environment - an environment almost foreign to me. I had no access to Git (also needed LFS which is new to me), I had no access to my typical npm utilities (which I use with agents). So here I am on morning two churning through a ton of Windows packages…

To avoid articulating the immense rage I felt for a solid half of day two, what I ended up with that seemed functional was the following:

- git-scm (at least I can use real git commands)

- Cursor natively on Windows

- A Python script to run builds locally in the agent

Once I got that running I started working on technical implementation docs. I had toyed with an ECS architecture (Entity-Component-System) in the past, so I figured I’d try to implement that in Unreal given I at least knew how it was supposed to work. I knew I would need to feed the agent some prior art for it to do the implementation well, so - as I do in just about every situation - I had the LLM “Google that for me”:

Research state of the art ECS implementations in Unreal Engine 5.6. We want to enable maximal use of C++ for components, while giving control to our game designer to implement these components with Blueprints.

(that’s not quite the prompt, but you get the idea)

That ends up generating a bunch of docs, and I grind through an initial prototype implementation. At the end of day two I had a bunch of components wired up as blueprints and had begun learning more fundamental systems in Unreal. It seemed like it was going to work! A bunch of systems were logging their state, I had game timers, the progression system, and everything - in logs at least - working. The level detected when I was in certain zones, knew where the bug was, had environmental readings via some widgets. Things were going great! Until they weren’t.

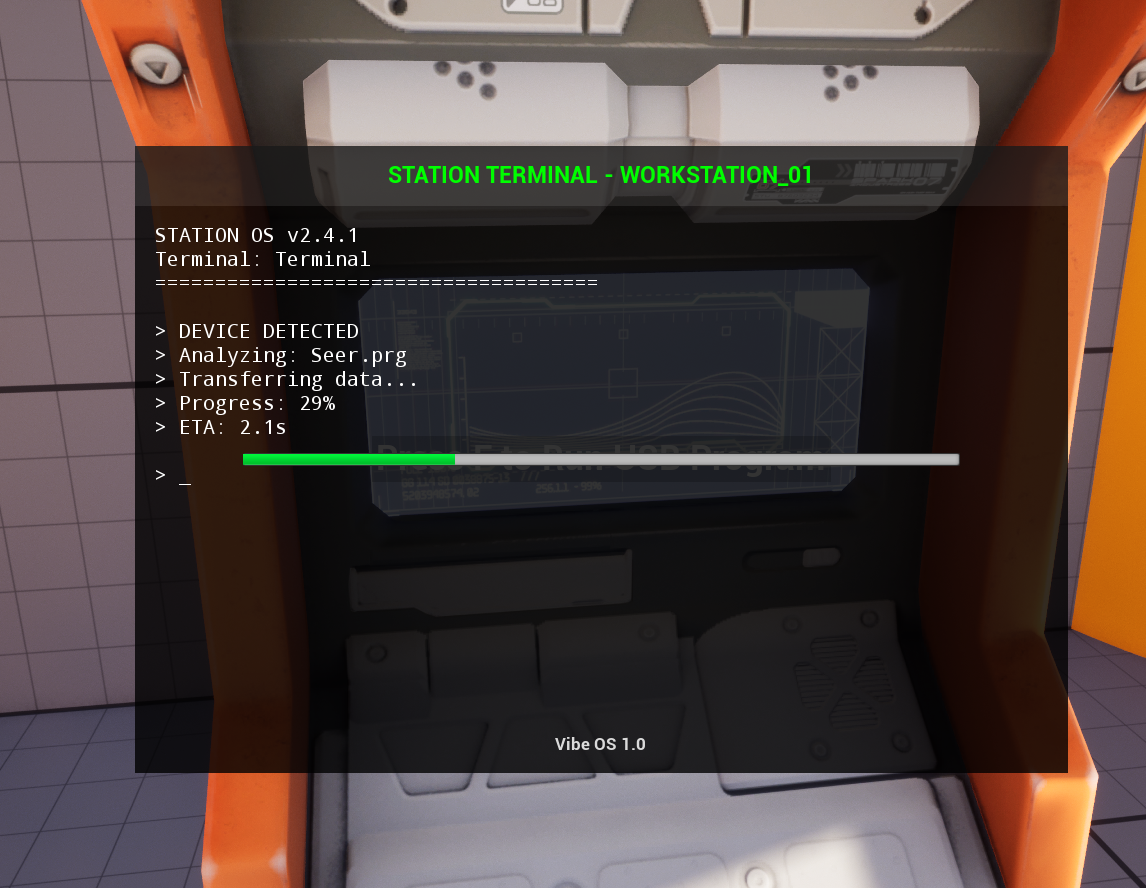

One of the key components of the game was the use of tools. The idea was you’d take something like a memory profiler (via a USB drive), plug it into a terminal, and then determine if the “bug” was active in that room, and if it was causing memory leaks. If it was, you’d have a clue of what kind of bug it was and be one step closer to achieving a win. This meant I wanted an item (a USB stick), one that you could pick up in the world, hold in your hand, and take to the terminal. I also wanted it so you could only have one item at a time, so you could drop an item, swap items, etc.
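The one-item-at-a-time rule is simple to state outside the engine. Here is a minimal, engine-agnostic sketch of it in Python; it is not the Unreal implementation, and the `Item` and `Hand` names are hypothetical. In Unreal the same state changes would also have to attach the actor to the player's hand and toggle physics, which is where things got difficult.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Item:
    name: str
    in_world: bool = True  # True while the item is lying in the level


class Hand:
    """A single-slot inventory: the player holds at most one item."""

    def __init__(self) -> None:
        self.held: Optional[Item] = None

    def drop(self) -> Optional[Item]:
        """Put the held item back in the world and return it (None if empty-handed)."""
        item, self.held = self.held, None
        if item is not None:
            item.in_world = True
        return item

    def pick_up(self, item: Item) -> Optional[Item]:
        """Take `item`, swapping out anything already held.

        Returns the item that was dropped to make room, if any.
        """
        if not item.in_world:
            raise ValueError(f"{item.name} is not in the world")
        dropped = self.drop()
        item.in_world = False
        self.held = item
        return dropped
```

Picking up a second item while holding one is a swap: `pick_up` drops the current item first, so the single-slot invariant never has to be checked elsewhere.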

I got quite a ways through this implementation but every so often would get hung up on something. After banging my head against it, what I found to be routinely happening was the LLM would go off the rails - suggesting outdated APIs (expected) and more importantly, completely violating the system design we were aiming for. This became a problem once we started implementing more of the composite behavior (e.g. interaction between the USB drive and the Terminal). No matter how I tried, I couldn’t get it back on course.

I’d feed in search results on how folks solve various inventory concerns, and it’d try to address things and see that they compile, but with no way to reasonably validate the results, it’d end up not working. A great example I fought with for a while was the drop mechanic. I needed the item to go from being in the world, to being in the player’s hand, and then when dropped, placed back in the world (ideally via physics). There are a number of ways you can implement that in Unreal, and whenever there are a lot of ways to do something with little prior art, you end up with the agent struggling to correctly guess the right approach up front.

In parallel on day three I spun up a new variant of our project trying to fix a bunch of problems and to implement a formal Actor-Component approach. This seemed to be more commonly recommended in UE, and I was hoping I could find more prior art to get the agent to succeed here. It ultimately didn’t, so I threw out this work.

At the end of day three I was pretty frustrated and feeling extremely unaccomplished. I had gone from what felt like quick learning progress, getting some systems wired up, to spending hours trying to fix this single system.

# Day 4: Pivot

Going into day four I was still pretty discouraged. I didn’t know what was going wrong, and I was failing to find enough prior art to feed into the context window (even ignoring when the agent goes off the rails). I had also chatted with ckj (my co-founder), and he mentioned that the Live Coding system wasn’t working the way he was used to. I’m not familiar with it, so I had no idea, but once he described how it normally behaves and I looked into it, it seemed clear it wasn’t working right:

- It was trying to compile a ton of files, all the time, even with no changes. I didn’t notice this in Cursor as it wasn’t behaving the same.

- More importantly, with my build scripts I’m not even sure the build process was updating the client (this was one of my biggest frustrations).

- No matter what, the information I could find online wasn’t helping me diagnose the problem. I did discover (I think) that git just... makes the whole thing worse for no reason whatsoever.

In general, this inability to have a functional build system was plaguing me. I had fought with it over the last few days, and at least had Cursor validating the code compiled most of the time which saved some effort. It usually ended up with a really common, annoying workflow though:

- Make a bunch of C++ changes

- Have no idea how I’m supposed to build correctly

- Reopen Unreal Engine

- Be very confused about whether my changes were reflected

- Lots of print statements

And really, the slowest iteration cycle you can imagine.

So we pivoted. We wanted to have something working, and our ambitious original project was wildly out of scope at this point.

We decided to cut the game down to a chase simulator - you’re escaping a creature, trying to collect some software to insert into the terminal (basically using some stuff I had at least gotten to work), and doing it before the creature captures you. Boring, but we were here to learn.

I was able to take my existing core components (my tools) and whittle them down to just a couple of behaviors. I also used this time to swap back to Claude Code. My flows were already messed up and I wasn’t happy working under Windows native. I had Claude Code running on a network share in WSL, where it would write the C++ code. I’d then close the Unreal editor, compile the code with my Python script, and manually feed any compilation errors back into my terminal session with Claude.

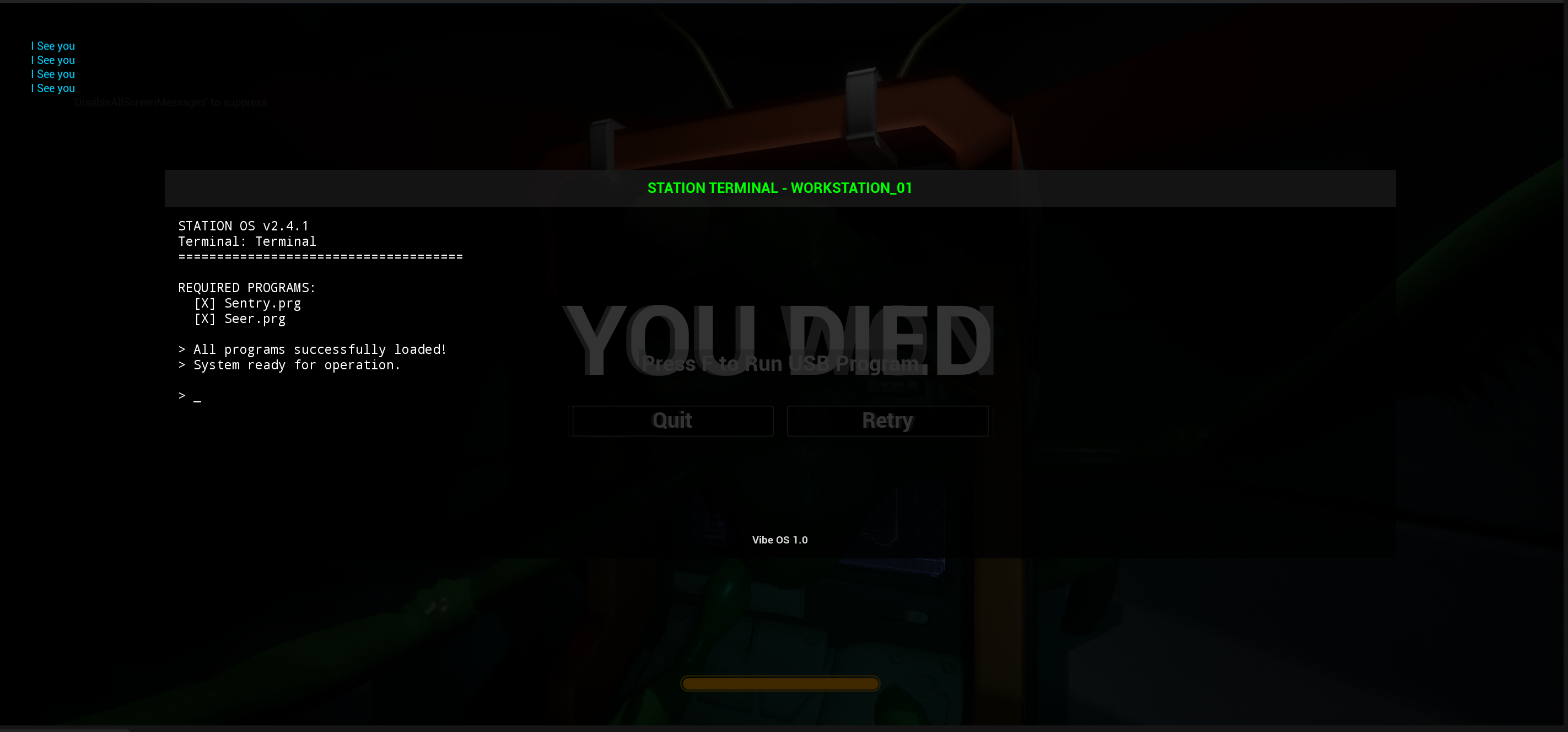
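The manual step in that loop, copying compilation errors back to the agent, is the kind of thing a small filter can shorten. Below is a hedged sketch, not the script I actually used: the function names are hypothetical, and it assumes the build log contains error lines in the usual MSVC (`file(line): error C####: ...`) or Clang (`file:line:col: error: ...`) shapes.

```python
import re

# MSVC:  C:\Proj\Source\Item.cpp(42): error C2065: 'Foo': undeclared identifier
MSVC_ERROR = re.compile(
    r"^(?P<file>.+?)\((?P<line>\d+)(?:,\d+)?\)\s*:\s*(?:fatal )?error (?P<msg>.+)$"
)
# Clang: /proj/Source/Item.cpp:42:10: error: use of undeclared identifier 'Foo'
CLANG_ERROR = re.compile(
    r"^(?P<file>.+?):(?P<line>\d+):\d+: (?:fatal )?error: (?P<msg>.+)$"
)


def extract_errors(build_log: str) -> list[dict]:
    """Pull compiler errors (not warnings) out of a raw build log."""
    errors = []
    for raw in build_log.splitlines():
        line = raw.strip()
        match = MSVC_ERROR.match(line) or CLANG_ERROR.match(line)
        if match:
            errors.append({
                "file": match["file"],
                "line": int(match["line"]),
                "message": match["msg"],
            })
    return errors


def format_for_agent(errors: list[dict]) -> str:
    """Render errors as one compact line each, ready to paste into a prompt."""
    if not errors:
        return "No compiler errors found in the build log."
    return "\n".join(f"{e['file']}:{e['line']}: {e['message']}" for e in errors)
```

This only trims the noise. It does nothing about the real bottleneck, which is having to close the editor and rebuild before any error shows up at all.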

When I sufficiently scoped stuff, such as how the Terminal UI was supposed to work, things went ok. For example, I wanted it so when you activated the USB stick on the terminal it would show a loading indicator, simulate some basic terminal text (as if it’s loading). I was able to one-shot that kind of work with the Agent, but not much else. It still required a pretty slow iteration cycle.

We shipped a pretty jank MVP, and while I’m happy we got something out the door, it’s not what I was hoping to pull off. Ultimately I don’t think platforms like Unreal Engine are in a situation where they’re going to be able to easily take advantage of the recent advances in LLMs, and that makes me sad. They need to be able to work with modern toolchains, full validation loops with headless agents (terminal is a good litmus test), and move beyond these semi-walled ecosystems.