MCP, Skills, and Agents

RIP MCP. Long live skills!

The misinformation is bothering me, so here’s my attempt to do something useful. Let’s break down MCP, Skills, Commands, and (Sub)Agents.

If you’re not caught up on the current hypewave, coding agents (Claude Code, Cursor, etc. - I’ll call them “harnesses” going forward) have latched onto skills recently. As with every one of these waves, everyone has immediately proclaimed that skills solve all problems and that all previous iterations of the technology are no longer relevant. That’s obviously not true, and I want to talk through how I think about each of them.

#Definitions

Let’s get on the same page…

Skills are reusable prompts, with optional bundled artifacts such as scripts or other material. They’re generally exposed in the system prompt like this:

[...] You have these skills available:

- FOO-BAR: A skill that does foo and bar

- OTHER-THING: The description of OTHER-THING that might also do something useful.

Up front, they consume context only for their metadata (roughly the name/location and the description), and the remainder of the skill (the body of SKILL.md) gets loaded on demand. “Loaded” usually means it gets inlined into the conversation (that is, the contents of SKILL.md get injected as context).

They can bundle other content which can then be referenced in the skill’s content, common examples being auxiliary documentation or simple shell scripts.

Generally the context inlined into the system prompt is minimal.
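To make the metadata-versus-body split concrete, here’s a minimal sketch of how a harness might do it. This is my illustration, not any particular harness’s implementation: `parse_skill`, `render_skill_index`, and `load_skill` are hypothetical names, and the front matter parsing is deliberately naive.

```python
from dataclasses import dataclass
from pathlib import Path


@dataclass
class Skill:
    name: str
    description: str
    path: Path  # location of SKILL.md on disk


def parse_skill(path: Path) -> Skill:
    """Read only the front matter (name/description) of a SKILL.md file."""
    meta = {}
    lines = path.read_text(encoding="utf-8").splitlines()
    if lines and lines[0].strip() == "---":
        for line in lines[1:]:
            if line.strip() == "---":
                break  # end of front matter
            key, sep, value = line.partition(":")
            if sep:
                meta[key.strip()] = value.strip()
    return Skill(meta.get("name", path.parent.name), meta.get("description", ""), path)


def render_skill_index(skills: list[Skill]) -> str:
    """The always-present part: one line per skill in the system prompt."""
    lines = ["You have these skills available:"]
    lines += [f"- {s.name}: {s.description}" for s in skills]
    return "\n".join(lines)


def load_skill(skill: Skill) -> str:
    """The on-demand part: the SKILL.md body that gets inlined as context."""
    text = skill.path.read_text(encoding="utf-8")
    parts = text.split("---", 2)  # naive: ["", front matter, body]
    body = parts[2] if len(parts) == 3 else text
    return body.strip()
```

Only `render_skill_index` costs tokens on every turn; `load_skill` is paid for when the model actually decides to use the skill.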

Tools, if you’re not familiar, are simple function calls that are exposed to the agent grammar, similar to the embedded skills above. Somewhere under the hood the harness is effectively saying:

[...] You have these tools available:

- functionOne(): the description of function one

- functionTwo(): the description of function two

Implementation varies, but harnesses will either eagerly (embedded) or lazily expose the entire JSON schema of the function’s parameters.

Generally, they will take up more context tokens than skills, but not drastically more with modern harnesses.
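A rough sketch of the eager and lazy variants, assuming a made-up `Tool` type and rendering format (real harnesses each do this their own way, and `render_tools` and `describe_tool` are names I invented):

```python
import json
from dataclasses import dataclass, field


@dataclass
class Tool:
    name: str
    description: str
    parameters: dict = field(default_factory=dict)  # JSON Schema for the arguments


def render_tools(tools, eager=True):
    """Build the tool listing; eager mode embeds every parameter schema."""
    lines = ["You have these tools available:"]
    for tool in tools:
        lines.append(f"- {tool.name}(): {tool.description}")
        if eager:
            lines.append("  schema: " + json.dumps(tool.parameters, sort_keys=True))
    return "\n".join(lines)


def describe_tool(tools, name):
    """Lazy path: hand over a single schema only when it is asked for."""
    for tool in tools:
        if tool.name == name:
            return json.dumps(tool.parameters, sort_keys=True)
    raise KeyError(name)
```

The eager listing grows with every parameter of every tool, which is where the extra tokens relative to skills come from.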

MCP is an over-engineered protocol that does a lot of things that no one uses, but for the sake of this conversation we’re going to focus on its ability to expose RPCs as tools.

Many people distribute a shim of their API as an MCP server, but the way you implement MCP is unrelated to the protocol itself.

For example, Sentry’s MCP server can expose a dozen tools, but it also exposes a single tool which is actually a subagent. The two do very different things, but both register themselves as tools (the same way “read file” might be a tool).

Agents, or subagents, are isolated agents, as you’ve come to know them, that are exposed as tools. Sentry’s MCP exposes a use_sentry agent which under the hood has all of the MCP tools available to it, but it’s all bundled up as a single tool exposed to the agent.

Agents have the benefit (and drawback) that their context window is isolated. That means you have to feed in ALL of the context as a parameter to execute the agent.

Some implementations of these agents will automatically take in context from the parent, others will make context referenceable as needed (think lazy loaded), and some will even allow forking of context and more complex behaviors.
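Here’s a small sketch of the simplest variant, where the subagent starts from a clean slate and the parent has to pass context in explicitly. Everything here is an assumption for illustration: `SubAgent` is a hypothetical type, and `model` stands in for whatever LLM call your harness makes.

```python
from dataclasses import dataclass
from typing import Callable

# Stand-in for an LLM call: takes the message list, returns the final text.
Model = Callable[[list], str]


@dataclass
class SubAgent:
    name: str
    system_prompt: str
    model: Model

    def run(self, task: str, context: str = "") -> str:
        # A fresh message list on every call: nothing leaks in from the
        # parent conversation unless it is passed via `context`.
        messages = [
            {"role": "system", "content": self.system_prompt},
            {"role": "user", "content": f"{context}\n\n{task}".strip()},
        ]
        return self.model(messages)


def register_as_tool(agent: SubAgent, tools: dict) -> None:
    """From the parent's point of view the subagent is just one more tool."""
    tools[agent.name] = agent.run
```

The parent only ever sees the string that `run` returns, which is both the benefit (a clean context window) and the drawback (anything you forget to pass in is simply gone).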

With me so far? Hopefully nothing above is controversial. You might think something I said above is inaccurate, but before you go throw a fit on X I encourage you to read the state of the art (today is January 20th 2026 btw).

#Skills

You’re probably looking at the definitions and thinking “these look pretty similar” and you’d be right! Both skills and MCP (or tools, more importantly) are intended to give a harness (aka an agent) more capabilities. The difference is really about the way they do it, and how you might use those in practice.

First, the newest hype machine, Skills. They are a way to encode routine tasks you execute, or want to share with the team. You might think of these as random quirks you try to address with an LLM, such as “simplify this code”, or things that even use external scripts like “create a pull request”:

# patience/SKILL.md

---

name: Patience

description: Give the user patience.

---

Make the user extremely patient so they finish reading this post.

The overarching theme, though, is that Skills give agents… new skills. It’s in the name! Those skills may use existing tools, or they may provide new pseudo-tools through bundled scripts that are explicitly referenced. They’re most commonly helpful if you need reusable prompts, or you have a set of behaviors that primarily use a local binary. The “create a pull request” skill, for example, instructs the agent to use the gh CLI, to format a pull request a specific way, and to push it to GitHub.

If you want some examples of what I believe are practical skills, take a look at Sentry’s internal skills. There are tons out there, and I won’t claim we’re experts on how best to craft reusable skills, but they’re pretty straightforward to build.

#MCP

MCP is the love-to-be-hated child of the industry, and honestly I’m writing this post because people still seem wildly misinformed about it.

Skills are all you need.

If skills teach you to cook, MCP provides the instruments that let you do it. I’m focusing on tools here, as I don’t care about any other components of the MCP spec. Tools pair extremely well with harnesses and Skills, and MCP is simply a way to expose new tools. Not everything needs to be exposed via MCP, but for network services it’s a great option.

The real issue is MCP has gotten a bad rap because of a lot of poor implementations: either ones that exposed far too many tools, or ones which simply didn’t offer much value. Part of this was the way the protocol was implemented in harnesses: similar to skills, MCP tools are inlined into the system prompt, but they’re more expensive because the input schema is part of the payload alongside the description. Worse, many MCP servers include a lot of tools that you don’t want or don’t need, often very unoptimized.

That’s not MCP’s fault though! Nor does it mean the value of MCP isn’t there.

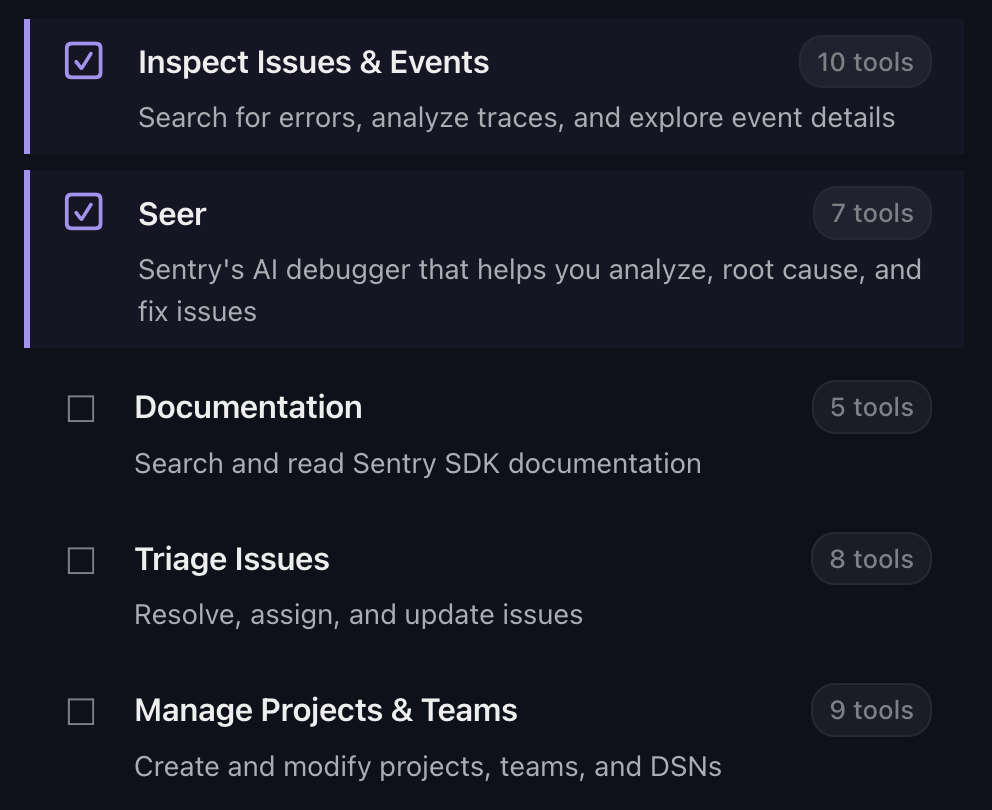

What most folks ignore about MCP is what it allows organizations to achieve. Sentry for example lets you choose which tool groups to enable - we call them skills internally, which is confusing given this post, but the concept is simple: upon authorization, you pick which capabilities you want:

Under the hood all we’re doing is choosing which tools to expose to you, vs which ones to hide. Part of this was to optimize the token consumption (more tools exposed = more wasted tokens, as well as worse recall issues for the model), but it goes deeper. The other big win here is you’re actually setting permissions. At the simplest level it’s read vs write.
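As a sketch of that idea (not Sentry’s actual code: the tool names, group names, and the `exposed_tools` helper are all hypothetical), the filtering can be as simple as:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    group: str    # hypothetical capability group picked at authorization time
    writes: bool  # True if the tool mutates data


# Hypothetical catalogue, for illustration only.
ALL_TOOLS = [
    Tool("search_issues", "triage", False),
    Tool("update_issue", "triage", True),
    Tool("search_docs", "docs", False),
]


def exposed_tools(tools, enabled_groups, allow_write=False):
    """Hide every tool outside the granted groups, and writes unless allowed."""
    return [
        tool
        for tool in tools
        if tool.group in enabled_groups and (allow_write or not tool.writes)
    ]
```

A read-only grant for the “triage” group would expose only `search_issues`, so the model never even sees the write tool, let alone its schema.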

Another big thing people often ignore about MCP is authentication. MCP is OAuth-native. Can you add auth to your CLI? Sure. OAuth is baked into the spec here though, meaning clients have a great, secure native flow that’s built once for all services. It also means all the advantages of OAuth carry over to the harness’s implementation, such as the newer ID-JAG grant type.

The last commonly overlooked benefit of the protocol is it enables easy context steering. First off, the tool descriptions allow me to inject implicit behavior (just like I could with skill descriptions). More importantly though, if you are using tools correctly, you’re crafting the responses of those to also steer your agent. For example, in Sentry’s MCP service whenever we respond with something like an issue’s details, we return it as markdown and include hints of what the user should do next. “You might want to call XYZ function to get these kinds of details”.
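Here’s roughly what that kind of response shaping could look like. Treat it as a sketch under assumptions: the issue fields and the hinted function names (`get_issue_events`, `get_trace`) are invented for illustration and are not the real Sentry MCP tool names.

```python
def render_issue(issue: dict) -> str:
    """Format a tool result as markdown and append hints that steer the agent."""
    short_id = issue["short_id"]
    lines = [
        f"# {short_id}: {issue['title']}",
        "",
        f"- Status: {issue['status']}",
        f"- Events: {issue['event_count']}",
        "",
        "## Next steps",
        "",
        # Hypothetical follow-up tool; the hint nudges the model toward it.
        f'- Call `get_issue_events(issue_id="{short_id}")` to inspect individual events.',
    ]
    trace_id = issue.get("trace_id")
    if trace_id:
        # Only suggest the trace lookup when there is actually a trace.
        lines.append(f'- Call `get_trace(trace_id="{trace_id}")` to see the surrounding trace.')
    return "\n".join(lines)
```

The point is that the response is written for the model, not for a JSON parser: it carries the data plus a suggestion of where to go next.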

Suffice to say MCP provides a lot of capabilities, many of which are not needed by most servers. On top of that, many MCP servers don’t need to exist (they’re either bad API wrappers or they’re actually truly replaceable by a skill). That said, it would not surprise me if you see MCP exposing skills in the future.

#Agents

IMO subagents are the ignored opportunity. My dream is to take our MCP subagent and have it be a native subagent. That is, imagine you could take something like SKILL.md and craft it accordingly:

# my-agent.md

---

description: "Interact with Sentry to diagnose issues, query your traces and logs, and be a real stand up robot."

model: opus-4.5

mcp:

  sentry: https://mcp.sentry.dev/mcp

---

A system prompt that coerces the agent to do the right thing, using all the tools available in the Sentry MCP Server.

What’s great about agents is that they’re fully encapsulated: you can run a different model, and you can fully isolate (and completely change) the tools available. The implementations today aren’t super featureful, but there’s no reason they couldn’t also be MCP-aware, meaning everything we did to make the Sentry subagent work could be a native feature of a harness.

Amp has a couple of great examples of subagents as tools, as does Claude Code. You’ve probably seen some of these fire off what looks like (or is called) a task. Those are commonly agents that are running with some degree of context to perform a somewhat isolated behavior. Deep research is another example on ChatGPT - it goes and processes a ton of information, summarizes it, and pulls that into the context window.

I’ve even gone as far as to implement a SKILL-based system in my own harness that treats every single skill as a subagent. It’s not perfect as you have some context loss, but the power it gives is quite something.

To me these agents end up resembling the “map/reduce” pattern quite well. You fan out work, collect results, and synthesize. That’s how I reason about coordination of their work.
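A tiny sketch of that shape, assuming `run_agent` is any callable that takes a task string and returns a result string (in practice it would wrap a subagent call; `fan_out` and `synthesize` are names I made up):

```python
from concurrent.futures import ThreadPoolExecutor


def fan_out(run_agent, tasks, max_workers=4):
    """Map step: run one isolated agent per task, concurrently."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in the same order as `tasks`.
        return list(pool.map(run_agent, tasks))


def synthesize(results):
    """Reduce step: collapse the results into what the parent gets back."""
    return "\n".join(f"- {result}" for result in results)
```

In a real harness the reduce step would likely be another model call that summarizes, but the coordination is the same: only the synthesized result lands in the parent’s context window.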

#Commands

Commands are dead in my opinion. They existed in different forms in different harnesses, but they are either a Skill (inlined) or a subagent.

I’ve got nothing to add there; you can ignore them. Most skills and subagents get exposed as commands anyway. It’s just UX at the end of the day.

#A Place for Everything

X is all you need

Please, please, please can we stop with that kind of thinking? It doesn’t help anyone and it just causes more confusion and pain.

The harnesses are constantly iterating on what works well, and particularly around what kind of interface works well for users. Many of these things look the same, but every time someone releases a new behavior the thought leaders of the internet determine it has replaced all previous functionality.

I want to believe that people have good intent, but it doesn’t help anyone if you run around exclaiming “MCP is bad” without any nuance around “actually this MCP implementation is bad”. Both skills and MCP - when used incorrectly - will cause context pollution in your harness. It’s important everyone learns the fundamentals of how all of this works, and with the state of technology today that means managing the context window.

So my ask is: go try skills, go try some good MCP servers, and you’ll find that both have a place and offer value. I personally use two MCP servers (Sentry always-on, XCodeBuildMCP when I’m doing iOS), and I’ve got about a dozen skills right now (with varying quality). How that shifts in the future will depend entirely on the workflows these things help me with.