Debugging OpenAI Errors

Recently I’ve been spending a lot of time working on Peated. I’ve taken the approach of somewhat intentionally over-engineering it in order to learn new technologies, but also as a way to dogfood parts of Sentry. One of those technologies is ChatGPT. I thought it’d be interesting to fill in blanks in my database: details about bottles of whiskey, tasting notes, and even verifying some other data such as the type of spirit or location of the distiller. The results are what they are: it works, and you probably can’t tell whether it’s the truth or not. One thing that was frustrating along the way, though, was the reliability of the model’s responses. You see, I want JSON, and I want that JSON to conform to a fairly specific spec in some cases.

It was actually fairly challenging to even get reliable JSON output, but I’m quite happy with the approach I settled on. I pass a Zod schema into the completions API as a function. I then fairly reliably get valid JSON out. Awesome! Fairly reliably != always, however, and while creating more consistency in the responses is an ongoing project, I wanted to share some of those learnings.

First, the Zod approach. Zod is a schema validation library for TypeScript and JavaScript, and it’s one that has a ton of support across other libraries. I’m using it to generate input and output schemas (jsonschema) in Fastify, I’m using it to generate form schemas in react-hook-form, and I’m now using it to coerce ChatGPT into conforming results. It’s pretty simple, so let me show you an example:

p.s. pardon my shit attempt at TypeScript

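    import OpenAI from "openai";
    import { z, ZodSchema } from "zod";
    import { zodToJsonSchema } from "zod-to-json-schema";

    // Assumption: the post does not show how Model is defined; a plain union stands in here.
    type Model = "gpt-3.5-turbo" | "gpt-4";

    export async function getStructuredResponse<Schema extends ZodSchema<any>>(
      prompt: string,
      schema: Schema,
      model: Model = "gpt-3.5-turbo",
    ): Promise<z.infer<Schema> | null> {
      const openai = new OpenAI({
        apiKey: process.env.OPENAI_API_KEY,
      });

      // https://wundergraph.com/blog/return_json_from_openai
      const completion = await openai.chat.completions.create({
        model: model,
        messages: [
          {
            role: "user",
            content: prompt,
          },
          {
            role: "user",
            content: "Set the result to the out function",
          },
        ],
        // Expose a single "out" function whose parameters are the Zod schema as JSON Schema.
        functions: [
          {
            name: "out",
            description:
              "This is the function that returns the result of the agent",
            parameters: zodToJsonSchema(schema),
          },
        ],
        temperature: 0,
      });

      // The function call arguments arrive as a JSON string.
      const structuredResponse = JSON.parse(
        // eslint-disable-next-line @typescript-eslint/no-non-null-assertion
        completion.choices[0].message!.function_call!.arguments!,
      );

      // Throws a ZodError if the model's output does not match the schema.
      return schema.parse(structuredResponse);
    }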

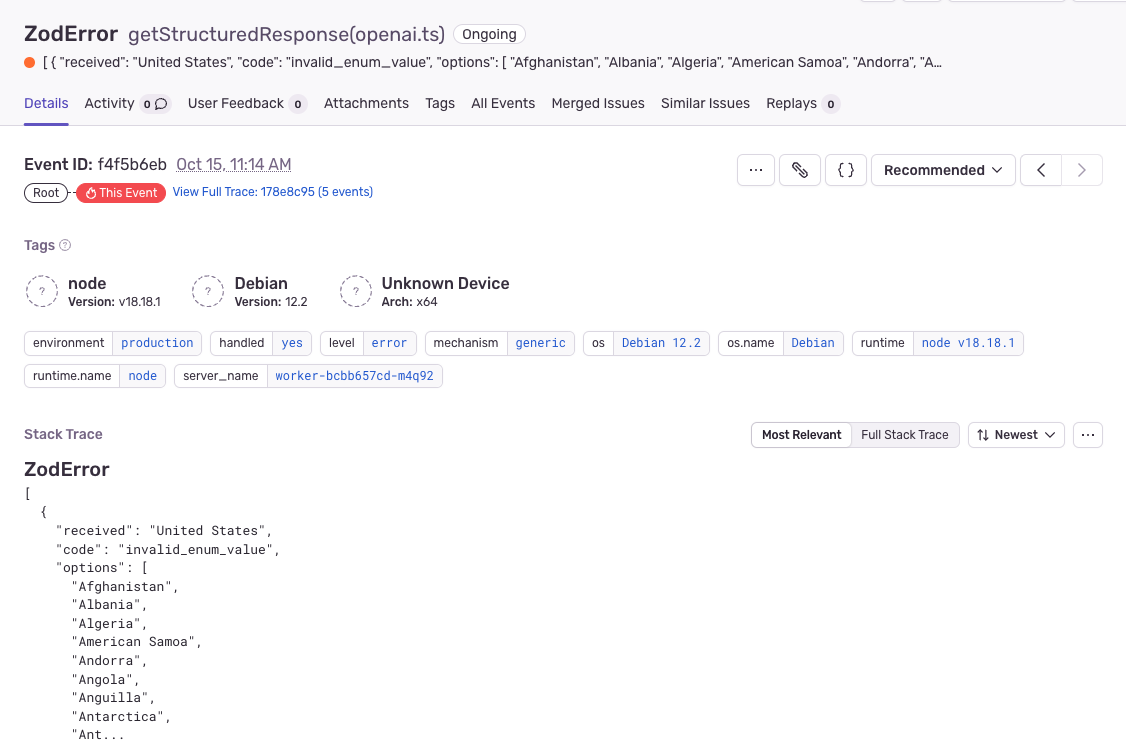

I then simply pass in my prompt and a Zod schema, and I mostly get the results you’d expect. It’s not perfect, however: sometimes we end up with output that doesn’t validate against the schema, mismatched types, and in particular it completely ignores things like enums. In fact, any attempt I’ve made to get it to “select an item from this list of choices” almost always ends up with it fabricating choices one way or another.
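One way to cope with fabricated choices is to normalize whatever the model returns against the allowed list before validating it, and treat anything else as missing. The following is a minimal sketch of that idea, not what Peated actually does; `CATEGORIES`, `Category`, and `normalizeChoice` are hypothetical names invented for illustration.

```typescript
// Hypothetical category list for illustration; not the real Peated schema.
const CATEGORIES = ["bourbon", "rye", "single_malt", "blend"] as const;
type Category = (typeof CATEGORIES)[number];

// Map a model-supplied value onto one of the allowed choices, or null if
// the model made something up. Matching ignores case, surrounding
// whitespace, and spaces or hyphens used in place of underscores.
function normalizeChoice<T extends string>(
  value: unknown,
  allowed: readonly T[],
): T | null {
  if (typeof value !== "string") return null;
  const key = value.trim().toLowerCase().replace(/[\s-]+/g, "_");
  const match = allowed.find((choice) => choice.toLowerCase() === key);
  return match ?? null;
}

const fromModel: Category | null = normalizeChoice("Single Malt", CATEGORIES);
const fabricated: Category | null = normalizeChoice("Japanese whisky", CATEGORIES);
console.log(fromModel); // "single_malt"
console.log(fabricated); // null
```

Returning null rather than throwing keeps a single bad field from discarding an otherwise usable response, at the cost of silently dropping data, so it is probably still worth logging the original value.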

Either way, those failures raise errors, and you’ll note above there’s no error handling. Given, well, Sentry and all, it seems kind of obvious we should do something about that.

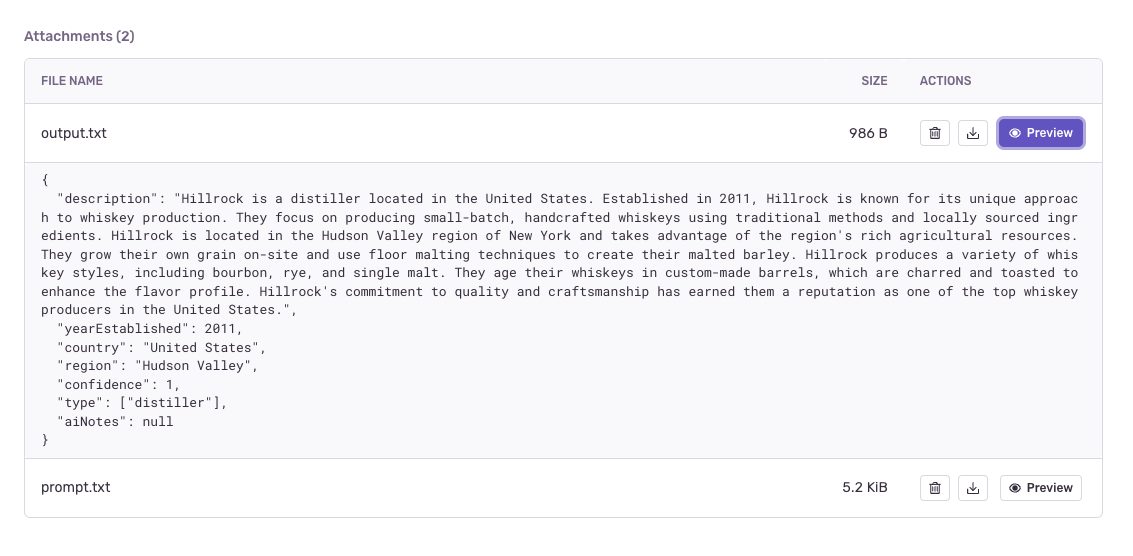

One of the challenges in using this kind of technology is that I need large payloads as inputs as well as large payloads as outputs. That means Sentry’s typical approach of “here’s a useful stack trace with some light context” isn’t remotely good enough. Fortunately we have a little-known API called Attachments, which, exactly as it sounds, allows you to attach files to events. We use this for a lot of our native apps, where you may want the raw crash dump kept around to load up in your local debugger. In our case, however, we simply want to capture the input and output for validation errors so that we can more reliably understand when and why things fail. Doing that’s not as easy as it should be in Sentry, but here’s an example of a helper:

    import {
      captureException,
      captureMessage,
      withScope,
    } from "@sentry/node-experimental";

    export function logError(
      error: Error | unknown,
      attachments?: Record<string, string | Uint8Array>,
    ): void;
    export function logError(
      message: string,
      attachments?: Record<string, string | Uint8Array>,
    ): void;
    export function logError(
      error: string | Error | unknown,
      attachments?: Record<string, string | Uint8Array>,
    ): void {
      // Use a temporary scope so the attachments only apply to this one event.
      withScope((scope) => {
        if (attachments) {
          // Each key becomes the filename of an attachment on the event.
          for (const key in attachments) {
            scope.addAttachment({
              data: attachments[key],
              filename: key,
            });
          }
        }

        // Plain strings are sent as messages, everything else as exceptions.
        if (typeof error === "string") {
          captureMessage(error);
        } else {
          captureException(error);
        }
      });

      console.error(error);
    }

The helper is built around two key parameters: contexts (which are arbitrary key/value mappings for additional data) and attachments, though only attachments appear in the snippet above. It wraps them in a fairly simple API to avoid littering your codebase with Sentry abstractions:

    logError(err, { "prompt.txt": "prompt" });
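Since the attachments map only accepts strings or bytes keyed by filename, it can help to have a small converter for whatever values you have on hand. This is a rough sketch rather than part of the original helper; `toAttachments` is a hypothetical name, and it assumes every non-string value is JSON-serializable.

```typescript
// Hypothetical helper: turn named values into a filename -> contents map
// suitable for a logError-style attachments argument.
// Assumes non-string values can be passed to JSON.stringify.
function toAttachments(
  values: Record<string, unknown>,
): Record<string, string> {
  const result: Record<string, string> = {};
  for (const [name, value] of Object.entries(values)) {
    if (value === undefined) continue; // nothing to attach
    if (typeof value === "string") {
      // Strings are kept verbatim as text files.
      result[`${name}.txt`] = value;
    } else {
      // Everything else is pretty-printed so it is readable in a preview.
      result[`${name}.json`] = JSON.stringify(value, null, 2);
    }
  }
  return result;
}

const attachments = toAttachments({
  prompt: "Describe this bottle of whiskey",
  output: { category: "rye" },
});
console.log(Object.keys(attachments)); // ["prompt.txt", "output.json"]
// The result could then be handed to the helper: logError(err, attachments)
```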

In practice this is actually working quite well, and the above example ends up looking like this with our error handling:

    try {
      const structuredResponse = JSON.parse(
        // eslint-disable-next-line @typescript-eslint/no-non-null-assertion
        completion.choices[0].message!.function_call!.arguments!,
      );
      // fullSchema is not defined in this excerpt; schema is used when it is unset.
      return (fullSchema || schema).parse(structuredResponse);
    } catch (err) {
      // Optional chaining here, so a response with no function call cannot
      // throw a second time inside the handler.
      logError(err, {
        "prompt.txt": prompt,
        "output.txt": `${completion.choices[0]?.message?.function_call?.arguments}`,
      });
      return null;
    }
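The try/catch above lumps together three different failures: the model not calling the function at all, the arguments not being valid JSON, and the JSON not matching the schema. Telling them apart might make the resulting events easier to triage. Here is a minimal sketch of that under my own assumptions; `ParseResult` and `parseFunctionArguments` are hypothetical names, and `validate` stands in for something like `(v) => schema.parse(v)`.

```typescript
// Hypothetical result type; none of these names come from the original code.
type ParseResult<T> =
  | { ok: true; value: T }
  | {
      ok: false;
      reason: "no_function_call" | "invalid_json" | "schema_mismatch";
      raw: string;
    };

// Parse the raw function-call arguments and report which step failed.
// `validate` is expected to throw when the value does not match the schema.
function parseFunctionArguments<T>(
  raw: string | null | undefined,
  validate: (value: unknown) => T,
): ParseResult<T> {
  if (raw == null || raw.trim() === "") {
    return { ok: false, reason: "no_function_call", raw: "" };
  }
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch {
    return { ok: false, reason: "invalid_json", raw };
  }
  try {
    return { ok: true, value: validate(parsed) };
  } catch {
    return { ok: false, reason: "schema_mismatch", raw };
  }
}

const truncated = parseFunctionArguments('{"name": "High West', (v) => v);
console.log(truncated.ok ? "ok" : truncated.reason); // "invalid_json"
```

The `reason` could be attached to the event as a tag or included in the message, while `raw` is the natural candidate for the `output.txt` attachment.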

What’s great about this is that Sentry is type-aware for attachments, and in the case of a text file, it generates a nice, easy-to-consume preview pane in the UI.

This has been fairly useful as I continue to tweak the prompts, and we’ll likely be exploring this rabbit hole as a more first-class citizen in Sentry in the near future.

Anyways, if you’re curious about the results of this, you can take a look at this example of High West Double Rye or the Yamazaki Distillery. I’m still exploring other ways to improve the reliability of the prompts, and also areas where it could provide additional utility. This is also all open source on GitHub if you want to dig in a bit more.